One core Unix idea was to have a toolkit of sorts, with many little tools and utilities that would do one thing each and do it well. They were designed for a monolithic system environment, working nicely by and among themselves, and with sufficient documentation and experience, the end user would be very productive.

Then other implementations of Unix arose, and they were not fully compatible with each other. You can still see this today when building software with Makefiles and shell scripts. For a start, there are many different shells.

The default system shell and make are used heavily in many Unix-style systems.

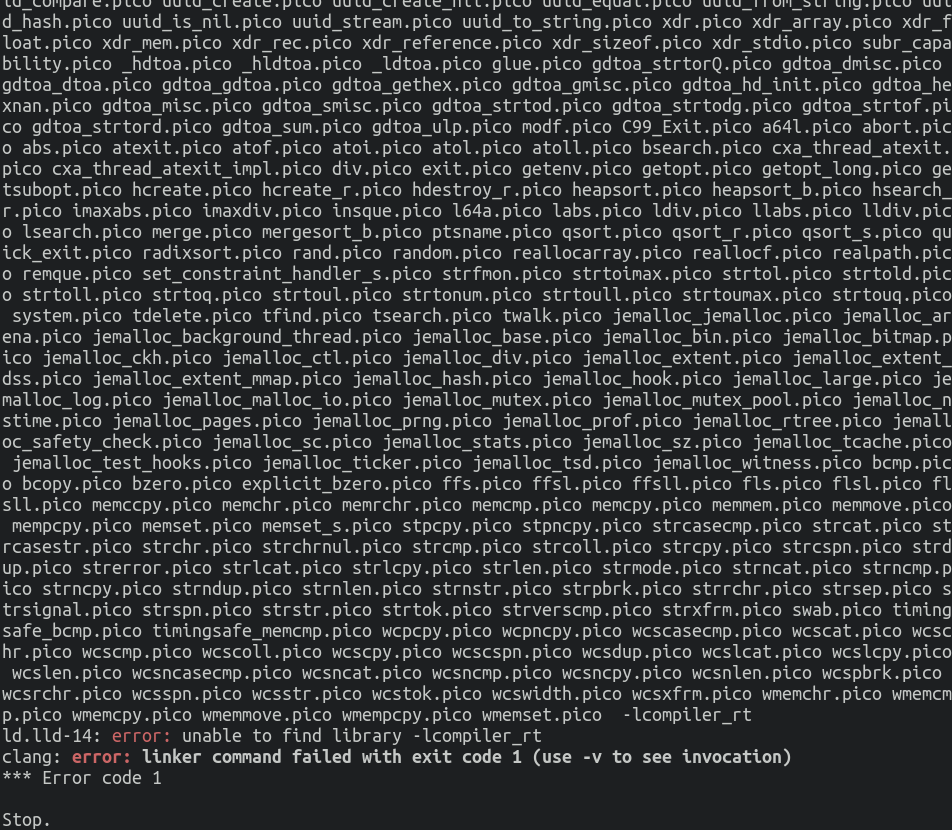

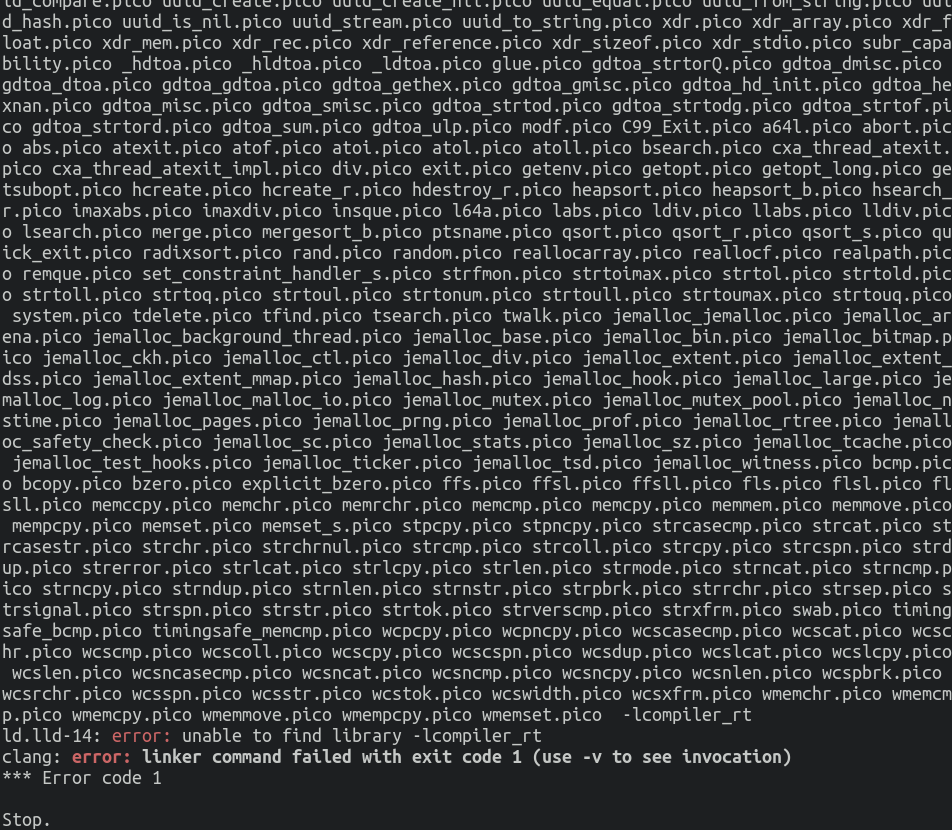

Both have their own little built-ins, and people have come up with hacks for more advanced logic, such as comparing (partial) strings and actual numbers. Speaking of which: Unix-style systems rely so heavily on text processing that build systems show symptoms of the limitations of make and shell scripts. While a build can be just a few simple instructions to the system, larger projects have grown Makefiles and scripts to hundreds or even thousands of lines, spread across dozens to hundreds of such files. Following along is extremely hard, especially for end users trying to debug them. And it all falls apart very easily as soon as extra tools come into play for which there are different implementations. Besides the make command and the shells themselves, examples are sed, awk, and many more of the common Unix tools. That creates a portability problem, so some build systems start with a bootstrapping process that builds the tools they rely on. However, even the bootstrapping part has the same problem again, so developers need to be extremely careful to use only commands and syntax that are available on all sorts of platforms and implementations out there, which is again hard to anticipate.
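A bootstrapping step can at least find out up front which tools are missing and whether the ones present look like GNU implementations. Here is a minimal sketch in Python; the tool list is hypothetical, and the GNU check is only a heuristic (GNU tools answer `--version` and mention "GNU", while many BSD tools reject that flag). Ironically, the probe itself depends on how each implementation behaves, which is exactly the portability problem at hand.

```python
import shutil
import subprocess

# Hypothetical tool list; a real build would derive it from its own scripts.
REQUIRED_TOOLS = ["sh", "make", "sed", "awk"]


def missing_tools(required: list[str]) -> list[str]:
    """Return the tools from `required` that cannot be found on PATH."""
    return [tool for tool in required if shutil.which(tool) is None]


def looks_like_gnu(tool: str) -> bool:
    """Heuristic: GNU tools accept --version and mention "GNU" in the output.

    Many BSD implementations reject --version with a usage error, so False
    only means "not obviously GNU", not "definitely BSD".
    """
    try:
        result = subprocess.run(
            [tool, "--version"],
            capture_output=True,
            text=True,
            timeout=5,
            check=False,
        )
    except (OSError, subprocess.TimeoutExpired):
        # Tool not found, not executable, or hanging: treat as unknown.
        return False
    return result.returncode == 0 and "GNU" in (result.stdout + result.stderr)
```

For example, `missing_tools(REQUIRED_TOOLS)` could tell a bootstrapping step which tools it has to build first.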

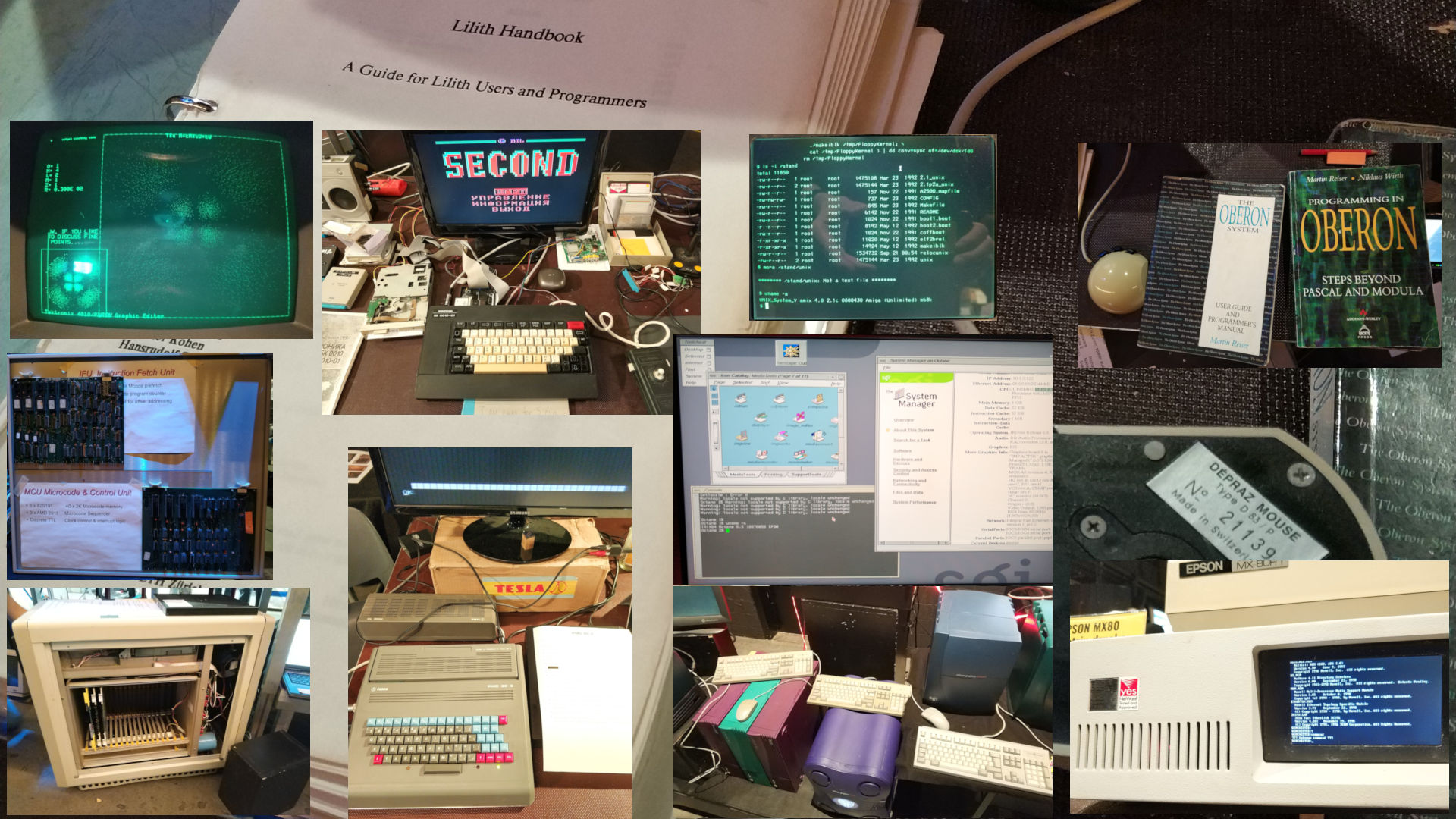

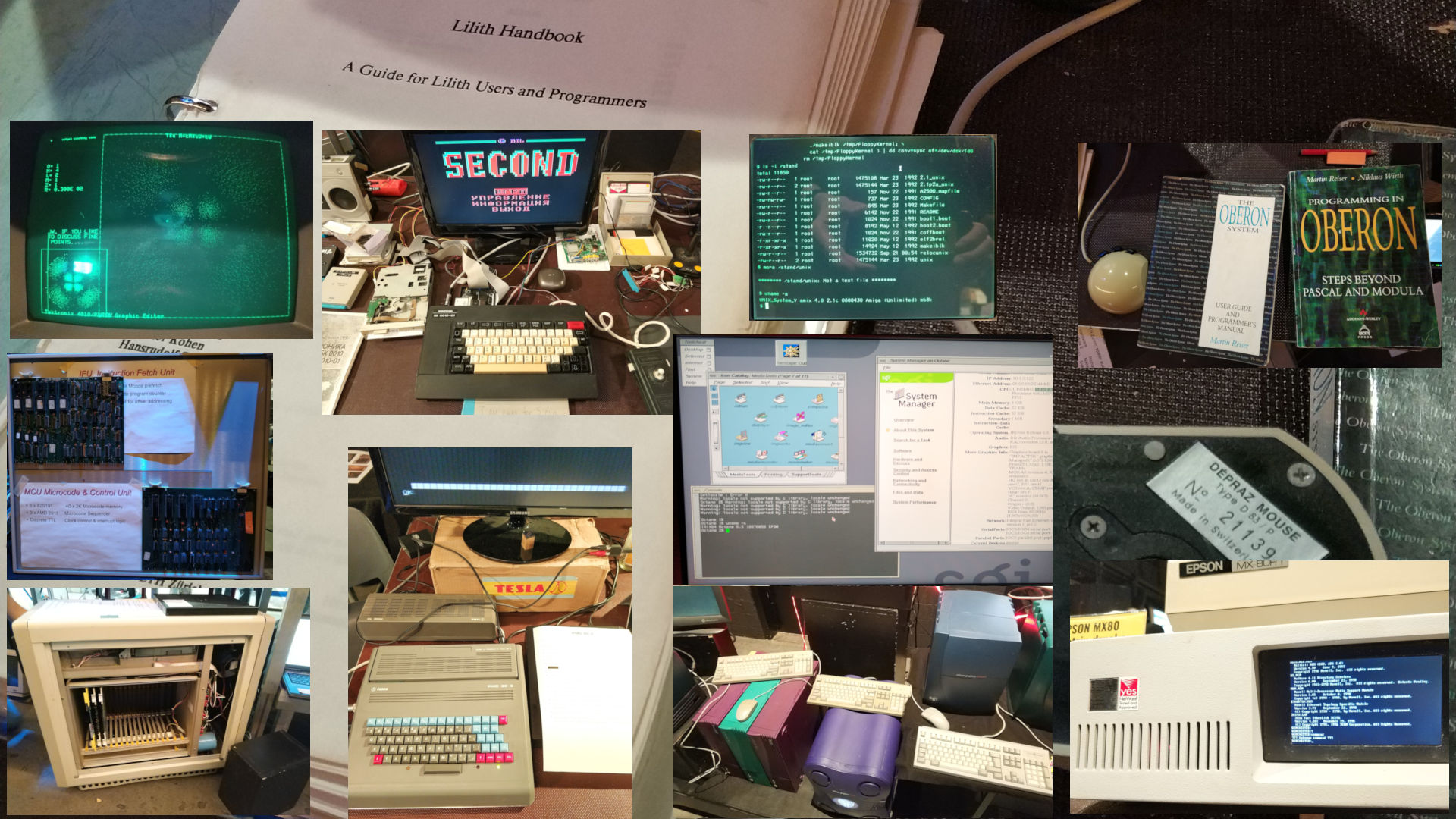

Three years after our last visit, my girlfriend and I went to Zurich again for the Vintage Computer Festival (vintagecomputerfestival.ch). Not only did we meet some of our friends and fellows, we also saw a lot of machines from the past, a few of which we had never even heard of before. We also attended a talk on the Multics operating system, which we knew had greatly influenced Unix and later systems, and learned that its sources had eventually been released and that there are still lots of people interested in it (multicians.org).

One highlight for me was holding an actual Depraz computer mouse in my own hand. I had only learned about it a year ago, when Ron Minnich passed one that had been used at Bell Labs (yes, THE Bell Labs) on to John Floren (https://jfloren.net/b/2021/8/6/1). We talked to many of the exhibitors, and one of them had brought a БК-0010, a PDP-11 compatible Soviet home computer from the eighties (http://www.mailcom.com/bk0010/index_en.shtml), for which he had a floppy with a very minimal Unix port, bkunix. I successfully ran the `cal` command on it to show the current year's calendar, and that felt amazing. :)

Last Saturday, I attended the Vintage Computer Festival in Zurich (vcfe.ch) together with my girlfriend. An exhibition hall full of old computers and gaming consoles awaited us, featuring machines (or, in some cases, parts of them) from IBM, HP, DEC and Apple, the obligatory Commodore C64s and Amigas, some other home computers and gaming consoles from the 90s and 2000s, and a whole bunch of people kindly explaining what they had brought. Besides the exhibition, there were huge posters for sale listing milestones of the digital evolution (computerposter.ch), of which I bought the 2016 version, tiny self-made chocolate floppy disks, a good selection of talks, and finally, a one-hour roundup of C64 demos from previous years. We attended a talk on the DEC PDP-10, for which the speaker had recovered many parts of the Incompatible Timesharing System (https://github.com/pdp-10/its) so it can be run in an emulator, and an improvised talk on the Atari 2600. I also took the chance to talk to Lawrence Wilkinson, who famously preserved an IBM System/360 front panel and reimplemented the underlying system on an FPGA (http://www.ljw.me.uk/ibm360/). The machine is now more than 50 years old, so it was quite impressive to see it working right in front of us. My girlfriend asked about the counters on the panel, and he told us they counted operating time, because back in the day, customers were charged per unit of usage time, i.e., very much the same payment model cloud providers use these days. I had to smile about that. :)

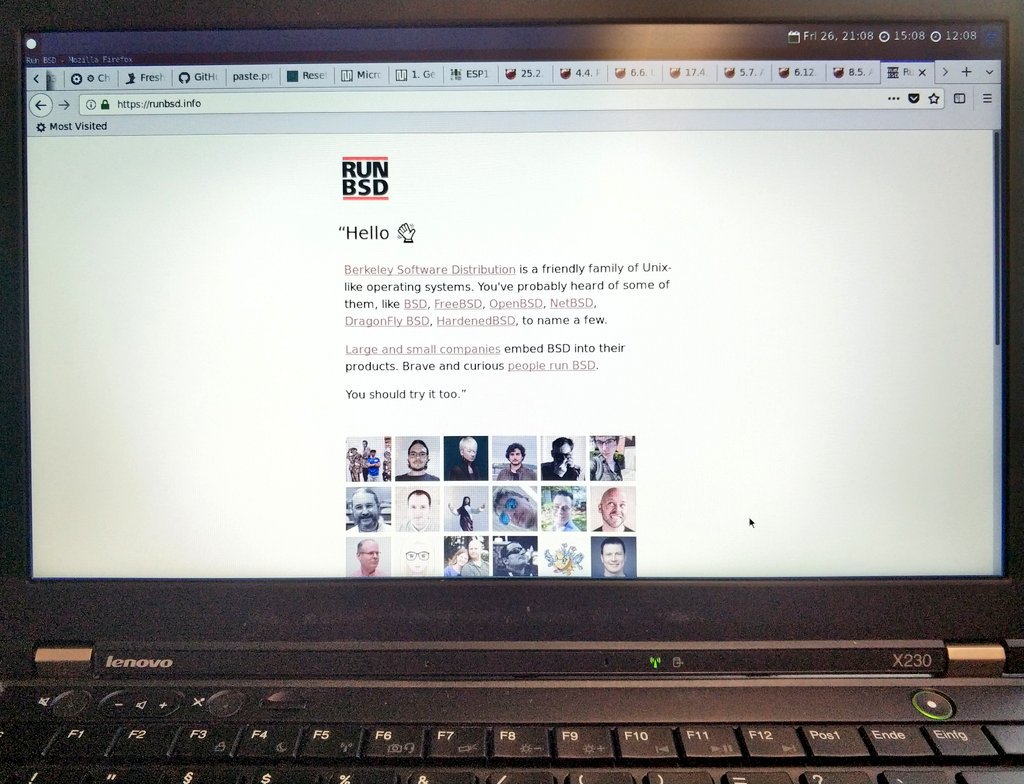

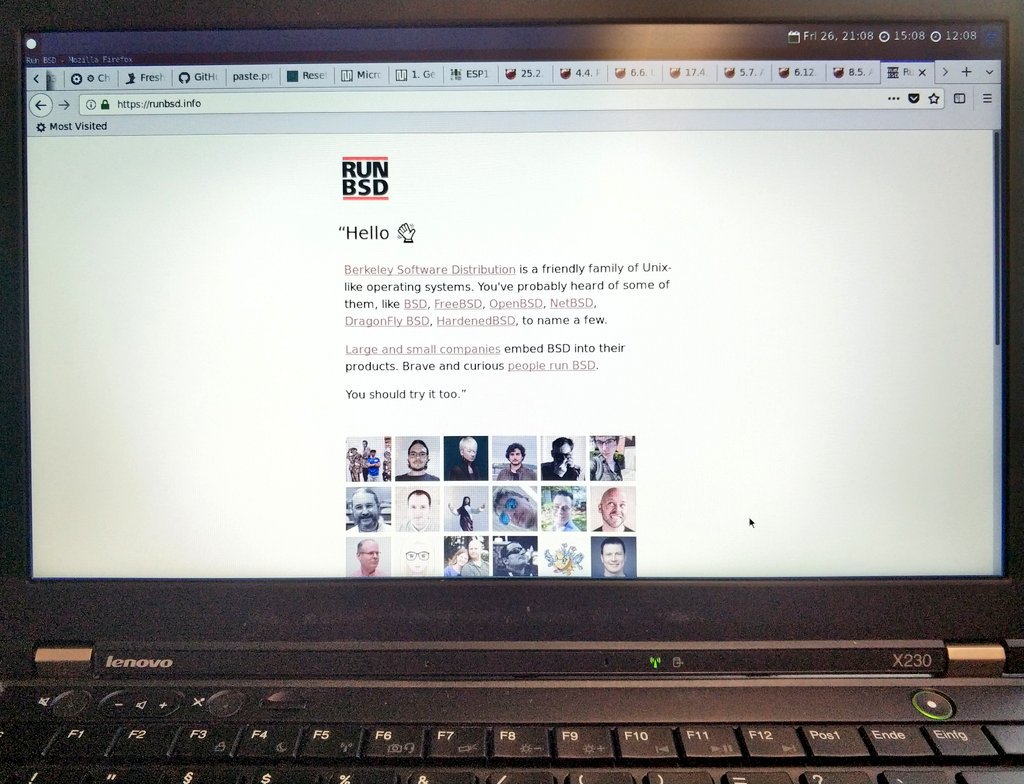

There is an ongoing joke about the "Year of the Linux desktop", even though the Linux desktop has been there and working well for at least a decade already, just not in the mainstream. People who know me also know that I like free and open source software for its usability rather than pure ideology, and I also like to try many different things. So I have played with many desktop environments, apps, shells, and operating system distributions, including non-GNU/Linux based ones. I like questioning things, discussing problems, contributing to packages and documentation, and getting things to work. Recently, in a FreeBSD group on a social network, someone asked what we use FreeBSD for. Here is my answer:

I run FreeBSD on servers and play with it on a laptop, but not my main laptop. I prefer Linux with systemd and GNU coreutils because of speed and convenience, i.e., NetworkManager, PulseAudio, utilities like cp behaving as expected, and everything just working. On a FreeBSD desktop, I face too many issues: battery state not being available unless I build the kernel from source or wait for another release, the touchpad being wonky, graphics issues with OpenGL quite often failing to initialize, audio being weird (e.g., having to switch to headphones via sysctl and then restart the app producing the sound), Bluetooth audio not being available because the necessary port doesn't even build with the required configuration, suspend/resume sometimes just shutting down instead of resuming, and Wi-Fi frequently requiring netif restarts and running into authentication errors.

My reply was, of course, quite concise rather than fully explanatory, but I hope it conveys an impression of the challenges one will face when trying FreeBSD on a desktop. I am certain that with further work, we will eventually get FreeBSD to a point where it can compete with a GNU/systemd/Linux-based desktop. Even though many things already work very well, I am hoping for more adoption from the GNU world to give it a boost. Inferno, Haiku and Hurd are even further behind than FreeBSD, so it remains the only other option. Other alternative, proprietary OSs are unfortunately way worse. That's why I still give FreeBSD a try.

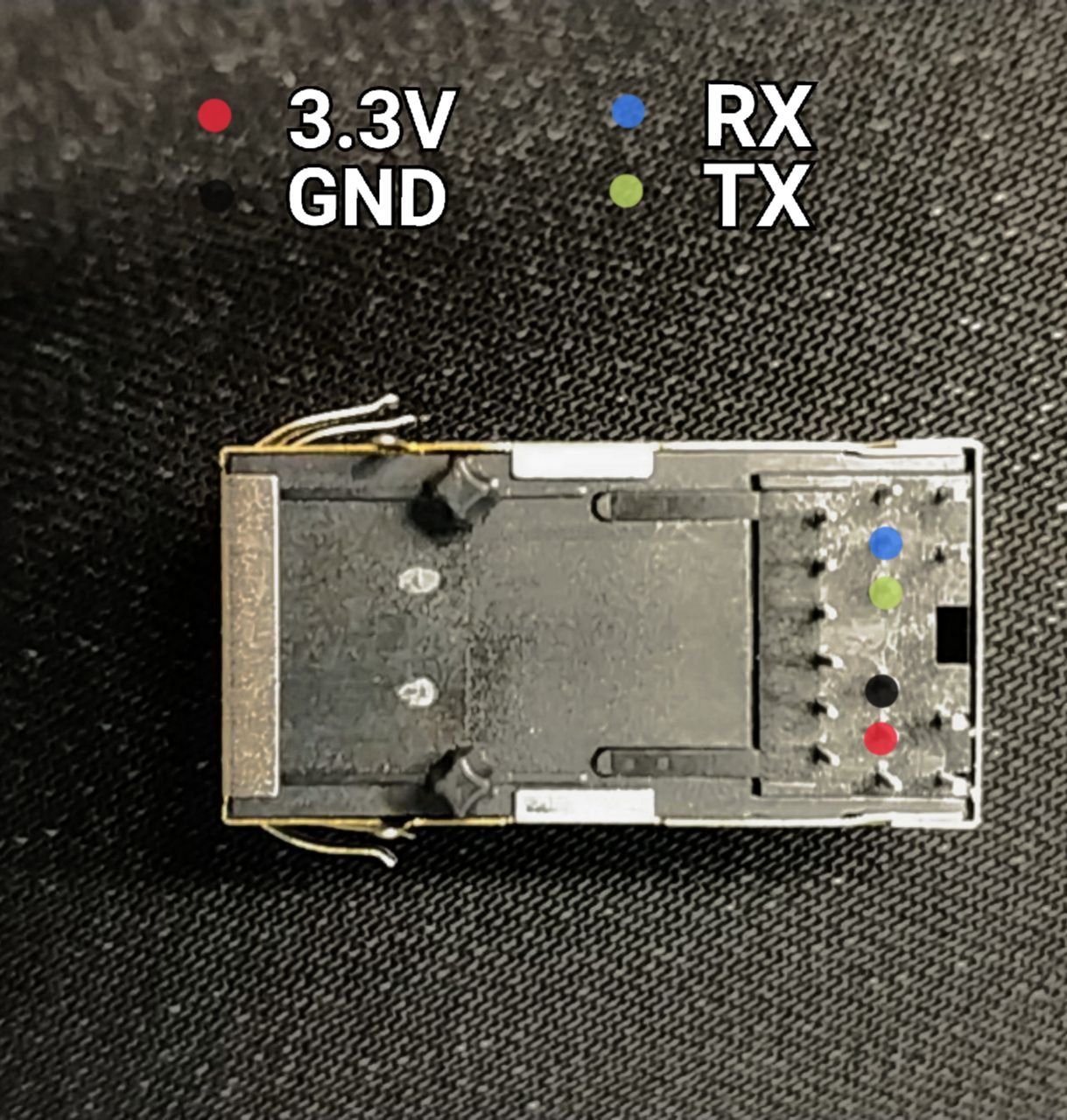

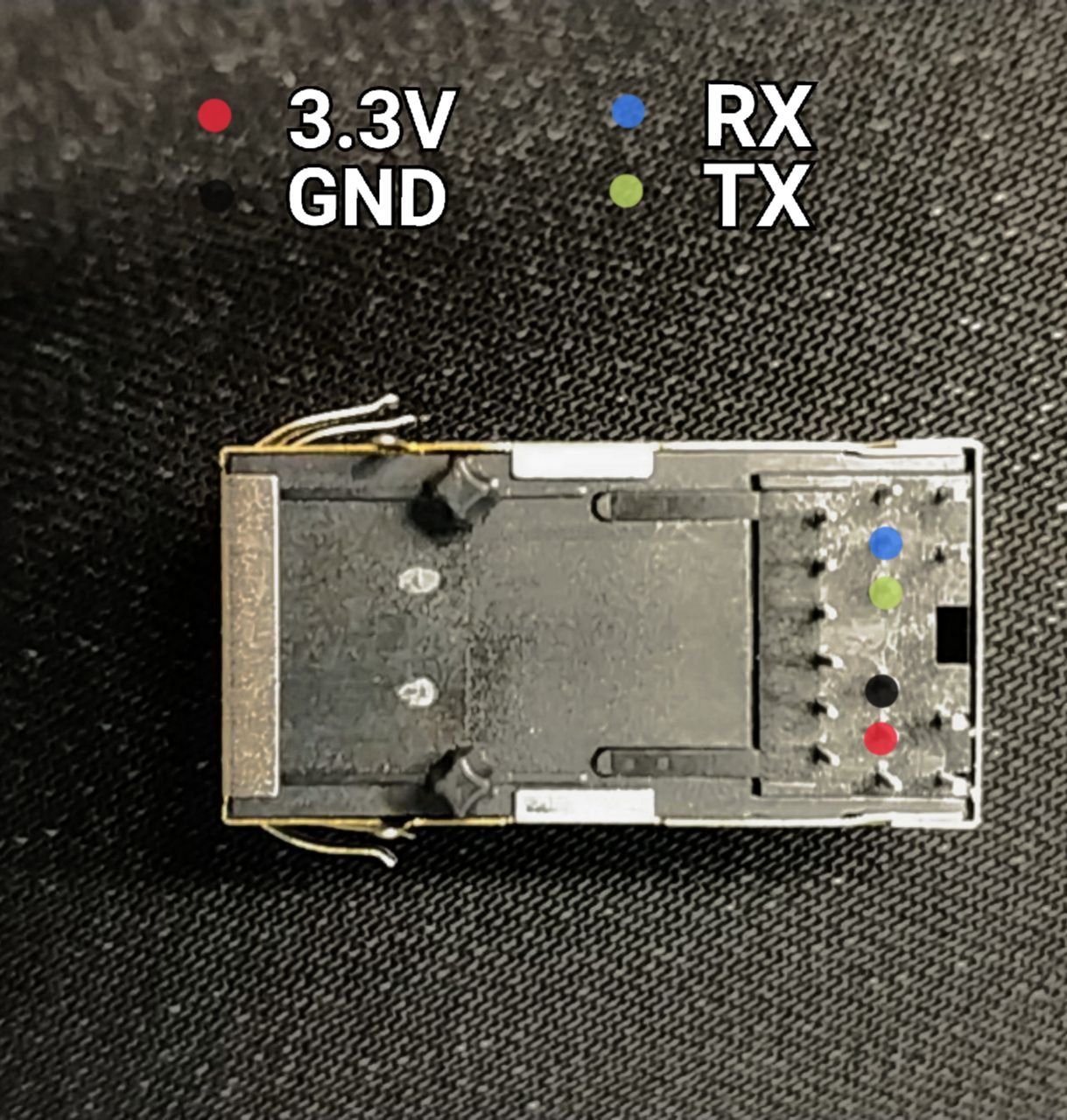

A friend of mine bought us two Eport Pro-EP10s, which are little Serial<->Ethernet devices running Linux on MIPS with 16MB of flash memory and 32MB of RAM. There are some downloads available at http://www.hi-flying.com/eport-pro-ep10 which include an SDK, the manual, a firmware release, and some more stuff. We played around a bit and figured out some details following the manual. Here is a little summary:

First we simply powered the device. Note that it needs 3.3V, which you can get from some USB<->serial adapters like FTDI ( https://www.adafruit.com/product/284 ). Then we plugged in an ethernet cable, and the interface on our laptop indicated that we were indeed connected. However, the LEDs on the EP10 did not light up. We opened one up to check and saw that the LED pins are actually not connected. They are the ones at the very end, and wiring them up is optional, which the manual also mentions. Anyway, we didn't receive an IP address either, although we had expected one, so we looked at the manual again to figure out what the EP10's address might be and configured an address for our laptop manually. The manual mentioned two possibilities, and for us, one of them worked. Then we quickly ran nmap, took a glance at the web UI, and took notes:

- the IP address of the EP10 is 169.254.173.207

- there are four TCP ports listening by default: 23 (telnet), 80 (web UI), 2323 (some sort of command tool with a login), and 8899 (which outputs to UART0)

- our firmware version is 1.33.5

- our build version is build1808171357333996
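The address we found lies in the IPv4 link-local range 169.254.0.0/16, so the laptop needs an address from that same range to reach the EP10 directly. A minimal sketch using Python's standard `ipaddress` module to check that; the laptop address 169.254.173.1/16 is an assumption (any unused address in the range should do).

```python
import ipaddress

# Device address as observed above.
EP10 = ipaddress.ip_address("169.254.173.207")

# Assumption: an address picked for the laptop; any unused one in the
# link-local /16 should work, as long as it differs from the device's.
laptop = ipaddress.ip_interface("169.254.173.1/16")


def can_reach_directly(host_if: ipaddress.IPv4Interface,
                       target: ipaddress.IPv4Address) -> bool:
    """True if `target` is on the same subnet as `host_if` (no router needed)."""
    return target in host_if.network and target != host_if.ip


print(EP10.is_link_local)                # True
print(laptop.network)                    # 169.254.0.0/16
print(can_reach_directly(laptop, EP10))  # True
```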

As already mentioned, there is a special service on port 8899. So we ran a little experiment: we connected the RX and TX lines to our USB<->serial adapter and fired up both netcat (`nc 169.254.173.207 8899`) and minicom (`minicom -D /dev/ttyUSB0`). Note that we are running a Linux kernel on our laptop. On a different kernel/OS, you may need to use different tools, e.g., PuTTY for the serial connection, and choose settings accordingly. Eventually we could type into netcat and see the output in minicom and vice versa. :)
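What netcat does here is simply open a TCP connection to port 8899 and pass bytes through. A minimal Python sketch of the sending direction, assuming the address and port noted above; `send_to_uart` is a name made up for this example, and the "TCP in, UART0 out" behavior is only what we observed, not something taken from the EP10 documentation.

```python
import socket

# Address and port of the EP10 as we found them; yours may differ.
EP10_HOST = "169.254.173.207"
EP10_PORT = 8899


def send_to_uart(data: bytes, host: str = EP10_HOST, port: int = EP10_PORT,
                 timeout: float = 5.0) -> None:
    """Send raw bytes to the EP10's port 8899, like typing into netcat.

    In our experiment, whatever arrived on this port came out on UART0,
    so it should show up in minicom on the serial side.
    """
    with socket.create_connection((host, port), timeout=timeout) as conn:
        conn.sendall(data)
```

With the device connected, `send_to_uart(b"hello from the network\r\n")` should make the line appear in minicom.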

We also tried connecting through telnet and entered some commands to see what it offers, and we could read and set some IDs, get the firmware version again, restart, and run a few other commands. However, as that wasn't too exciting, we went on with our research.

Apparently, there are some more downloads available from Baidu cloud, which we haven't managed to get so far, because they are only offered through extra downloader software for Windows and we couldn't find an option for a direct download. There are hints that the firmware is based on OpenWrt, so we will look for ways to get SSH onto it, maybe Mosquitto for IoT fiddling, and maybe a way to install our own firmware, ideally an actual upstream OpenWrt release.

Three software projects have made decent new releases:

- Lumina Desktop 1.5.0 ( https://lumina-desktop.org ), a Qt-based desktop environment coming from the TrueOS project

- Quaternion 0.0.9.4, a Matrix ( https://matrix.org ) chat client, also based on Qt

- Hyper 3 ( https://hyper.is ), an Electron-based terminal emulator by Zeit, now rendering with WebGL

I am especially excited about the first two, because I just love Qt apps. They are fast, good-looking, portable, and easy to build. To be honest, I always took Hyper as a joke, but it seems they are very serious about it after all.

This year I went to the Revision demoscene party for the second time ( revision-party.net ). I also submitted my first demo ever, a little framebuffer animation running in the firmware on coreboot and SeaBIOS. That was a lot of fun, especially making it work on real hardware (Lenovo X230).

One of my favourite parts of the party is definitely the live music acts. Lots of people then gather by the stage to dance like there is no tomorrow. And of course it's always great to meet other people from so many different places around the world, all coming with a shared mindset and the common goal of creating awesome demos.

Yesterday was the first spring(); break; - a new event at Das Labor, our hackerspace in Bochum.

Besides many interesting talks and workshops, we had a hands-on session with coreboot and flashed it onto two laptops, which now happily boot faster with the open source firmware. Also, for the first time, we had a non-ThinkPad device: an HP EliteBook 2570p.

View of the rocket from the hotel :)

This year, I'm in Hamburg again, but this time I couldn't get a ticket for the actual Chaos Communication Congress (33C3) because it was sold out. :( However, some folks organized an alternative event in the hotel right next to the Congress Center Hamburg, namely Alt33C3 (see www.alt33c3.org). It's crowd-funded, small, and just a blast! We have a very cozy room, set up projectors and FM for the live stream and audio transmission, and of course a lot of fun! And the best part: we have dedicated extra talks exclusively for us. <3 :)

The biggest issue we have on GNU/Linux systems is the question 'How do you (install/run/configure) X on distro Y?' (and often the answer to it).

We have awesome package managers, distros, communities, people, helper tools and infrastructure. But one issue is still not solved: maintainers and packagers. We desperately need people who work on the actual packages. Now we see curl-pipe-sh, virtual machines, Docker, snappy, and a lot of other hardly maintainable, irreproducible bullshit. I remember the days when ISVs actually wrote that installation meant './configure && make && make install'. You just needed a toolchain. No fancy tools, no extra terabytes of disk space and memory, and - well - not even a package manager! The latter, however, does something excellent: it can handle dependencies and keep track of what you have installed. Awesome! However, this assumes that people create metadata for it. In every package management system, you need to specify a name, a version, dependencies (based on the names other packages use), and build and install commands. Nowadays, that can become way more complex than make && make install, though: for some distros, patches for file system locations are necessary, and the overall system's configuration may cause collisions (say, certain presets, or basic components like libav vs ffmpeg, libressl vs openssl, openrc vs systemd, implying even more issues with udev, some other daemons, and what not), etc. For all this extra work, we need people, and we need endurance, knowledge, and strong opinions to keep things aligned. Arch Linux is doing very well here, which is why it's mostly my distro of choice. It might also be the reason why it is now considered a major distribution. There are even various Arch-based distros available, like the prominent Manjaro, Antergos, Chakra, and more, as you can see here: https://wiki.archlinux.org/index.php/Arch-based_distributions
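To make the "handle dependencies and track what is installed" part concrete, here is a rough sketch in Python. The `Package` fields mirror the metadata listed above, the package names are hypothetical, and the ordering uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+); real package managers do far more (versions, conflicts, virtual packages).

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter


@dataclass
class Package:
    """Minimal metadata: name, version, dependencies, build/install commands."""
    name: str
    version: str
    depends: list[str] = field(default_factory=list)
    build: str = "./configure && make"
    install: str = "make install"


def install_order(repo: dict[str, Package], wanted: str,
                  installed: frozenset[str] = frozenset()) -> list[str]:
    """Dependencies first, `wanted` last; skips what is already installed.

    Raises KeyError if a dependency is missing from the repo and
    graphlib.CycleError if the dependencies form a cycle.
    """
    graph: dict[str, set[str]] = {}
    pending = [wanted]
    while pending:
        name = pending.pop()
        if name in graph:
            continue
        deps = repo[name].depends
        graph[name] = set(deps)  # node -> the packages it needs first
        pending.extend(deps)
    order = TopologicalSorter(graph).static_order()
    return [name for name in order if name not in installed]
```

Given a hypothetical `app` that needs `libfoo` and `libbar`, which both need `libc`, `libc` comes first and `app` last, and anything already in `installed` is skipped.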

Yet these are not officially supported by Arch, unlike the Ubuntu GNU/Linux flavors like Kubuntu, Xubuntu, Lubuntu, Edubuntu, etc. Canonical Ltd. actively supports and even QAs/tests this whole family. Both ways are OK in my view. One means focus (the main driving principle behind Arch is KISS, after all), the other means looking at the big picture and being omnipresent.

Now still the aforementioned big issue is not solved. So grab a cup of coffee or tea, a cocktail or a beer, calm down, and please, help us out! It does not matter which distro or desktop you prefer to use. :) <3

Packaging is the ideal way of bringing software to users. In the FLOSS (free, libre, open source software) world, people can simply query a repository from a central tool they know well, without visiting obscure websites, and install things with a single command or click, just like in an app store. This tool is called the package manager. It is trustworthy, convenient, and easy to use.

However, someone has to do the work behind it, and that is usually another user who wants that particular piece of software themselves or who has been asked to package it, someone on a distro's packaging team, and so on.

Now, to actually be able to create a package, the packager has to know how to build and install the application. Besides that, there are different package formats, helper tools, and standards, depending on the distro.

If you would like to have a package for your system, I strongly encourage you to at least give it a shot, because in surprisingly many cases it is easy. Read the guides and tutorials in your distro's wiki and documentation, or get in touch through IRC or forums.

Many packagers have expertise in particular fields like Python, Java, KDE, Qt, GNOME, desktop stacks, server applications and so on, and they are willing to share it so that the community grows and they get help themselves.

If you ever read that you should pipe scripts fetched through wget or curl into a shell like bash: That is certainly not a reasonable way of installing software.

It might seem easy because you just run a single command, but you don't know what will actually happen, if it will harm your system (even if the author does not intend it), and it will be very hard to remove it later if you don't want it anymore because your package manager will not even know about it.

You cannot even keep track of things if you don't maintain a list yourself, and probably you won't even have a comfortable desktop shortcut.
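If a project only offers such a script, a somewhat safer habit is to download it to a file, read it, and compare it against a checksum the authors publish before running anything. A minimal sketch, assuming a SHA-256 checksum is published somewhere trustworthy; this only addresses integrity, and the package manager still won't know about anything the script installs.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash the file in chunks so large downloads need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(64 * 1024), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_download(path: Path, expected_hex: str) -> bool:
    """True only if the file's SHA-256 matches the published value."""
    return sha256_of(path) == expected_hex.strip().lower()
```

Only if `verify_download(Path("install.sh"), published_checksum)` returns True (both names being placeholders here) would it make sense to even consider running the script.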

These are experiences I have had myself, and they were the main reason for me to get started with packaging. Grab a piece of software you want on your system and package it. I promise you that it will give you a great understanding of FLOSS in general, so you and others will benefit in multiple ways.

Last year, I attended DebConf, the annual Debian developers' conference, for the first time. Since it was held in my country and was a great chance to get to know the large community behind an awesome open source project, I couldn't miss it, and I am very happy that I took the chance.

I was more than impressed to find a real family event (lots of parents came with their children), a very calm and relaxing atmosphere (we had a whole day to discover the beautiful city of Heidelberg), and a very ambitious team at the venue. Having taken away so much from the event, even though I'm not even much of a Debian user, I want to share my insight with the world. Let me summarize some ideas and a very meaningful chat we had with Jacob Appelbaum after his talk, because I agree with many of his views.

Hardware issues with x86 aside, he insists on the liability of operating systems, especially regarding secure communication and privacy. He would love to see a Linux kernel patched with grsec (and thus also PaX for memory protection, ASLR, etc.) and configured to support AppArmor in Debian's default repository, with protocols like AppleTalk dropped from it instead. Connections during installation should be encrypted by default with non-superuser network access, and services like NFS and Avahi should be removed from the base system. That would be similar to the setup of my Gentoo Linux system. Debian is a very robust distribution, powering Tails and almost half of all distributions currently out there (just check the Linux family tree on Wikipedia), so securing it would automatically contribute to the safety of a great many machines in the world, especially servers. On the other hand, stability in the sense of compatibility is just as high a priority, if not higher, so packages cannot simply be dropped or kernel features changed: PaX/grsec can easily render many binaries unable to run. Offering another installation set would be an option, but it would be quite hard to maintain with the properties described above. From 5 years of experience with Gentoo, I know how much effort it takes to patch and build a kernel over and over again, so Debian cannot be blamed for not simply doing it. Another aspect Jake stressed is compartmentalization. At first I was thinking of approaches like the Xen hypervisor in Qubes OS, but that is not what he meant. He would prefer jails and tools written in languages like Go to make use of their built-in type safety.

These features would already be a huge challenge to implement, but he also suggested a sensible set of packages to provide by default to begin with: The Tor Browser and the Tor Messenger (he described its features and a beta version was released by the end of the year) or Ricochet (another secure instant messenger).

Those were very technical details, but Debian means a lot more on the ethical and social side. The Debian community has carefully established a web of trust for communication, contribution and maintenance. They keep discussions not only modest but also authentic. During chats, I learned how their key-signing parties work. Checking identities (by means of passports or ID cards) is a crucial and mandatory part of the procedure, so one can feel very safe in their environment.

Finally, here are three quotes from Jacob that touched me the most:

'Debian does a lot of stuff right.'

'[The] main thing is to keep quality assurance [...] at a level.'

'We should try to build a world where we are free.'

I am reading this poem by Georg Trakl (translated from German):

Cursed be you, dark poisons,

White sleep!

This most strange garden

Of twilit trees

Filled with snakes, moths,

Spiders, bats.

Stranger! Your lost shadow

In the red of evening,

A sinister corsair

In the salty sea of sorrow.

White birds flutter up at the hem of night

Over collapsing cities

Of steel.

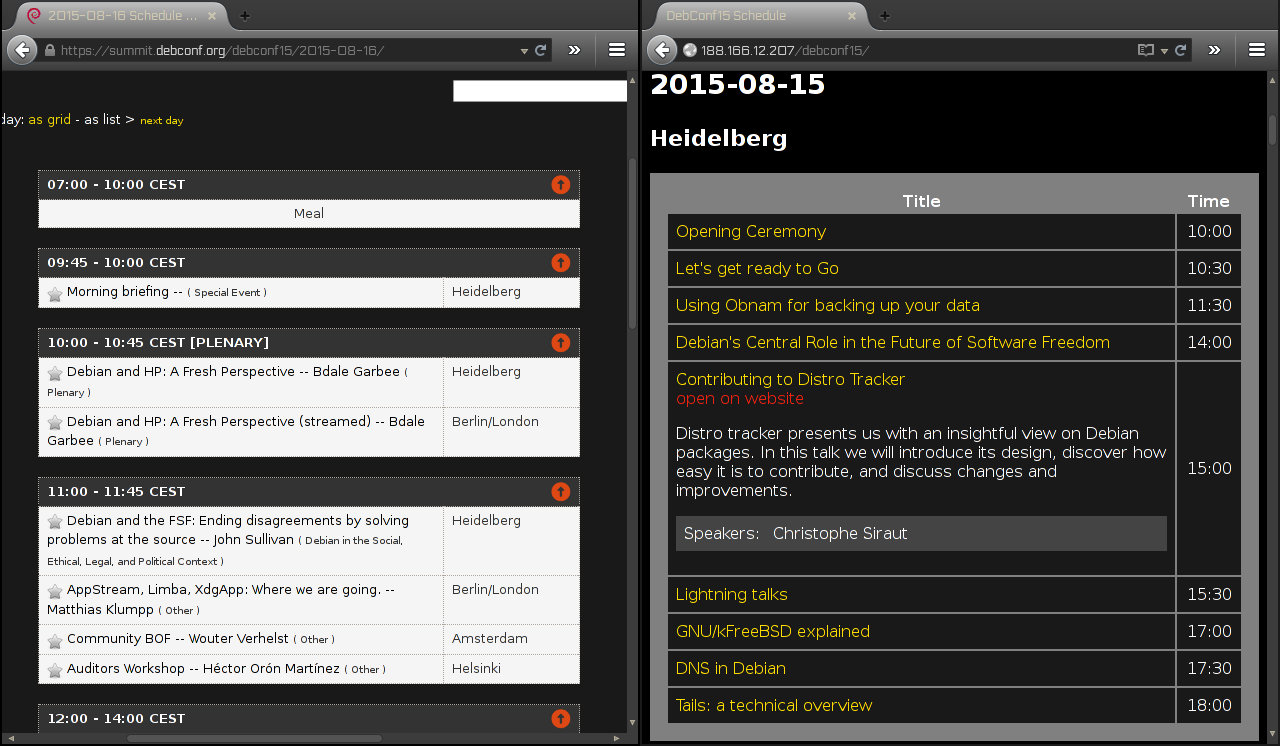

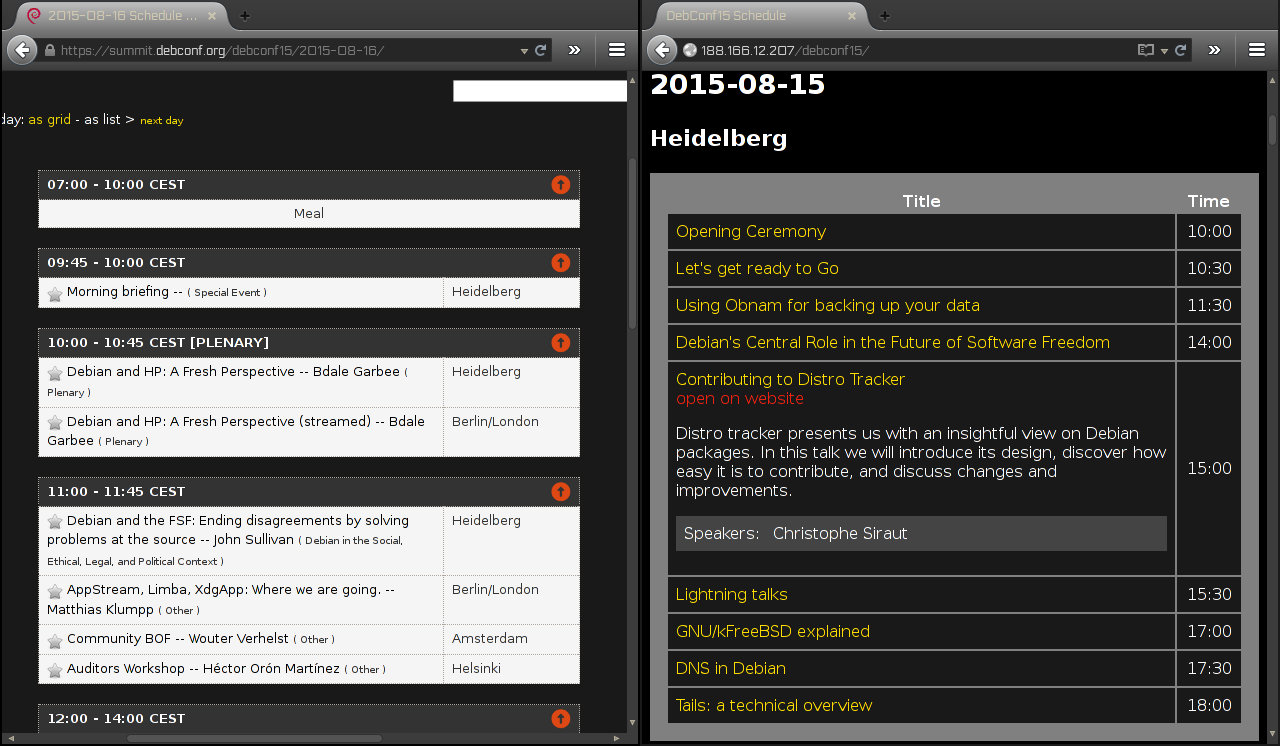

I have quickly created a mobile-friendly version of the schedule ( http://bit.do/debconf15schedule ). See this screenshot for a quick comparison.

The app is based on AngularJS and uses the XML export from the official website ( https://summit.debconf.org/debconf15/ ). Since Summit doesn't send CORS headers, a browser won't let the app load that export directly from another origin, so I set up a cron job that keeps a local copy up to date, refreshing every 5 minutes.
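Once the cron job has a local copy, the app only needs to turn it into a list of talks in time order. A rough sketch of that parsing step in Python, assuming a simplified XML layout with `<event>` elements carrying `<title>`, `<start>` and `<room>` children; these element names and the sample data are hypothetical, and Summit's real export may look different.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified export format used only for this sketch.
SAMPLE = """<schedule>
  <event><title>Closing</title><start>2015-08-22T17:00</start><room>Room A</room></event>
  <event><title>Welcome</title><start>2015-08-16T10:00</start><room>Room A</room></event>
</schedule>"""


def parse_schedule(xml_text: str) -> list[dict[str, str]]:
    """Turn the export into a list of events sorted by start time.

    ISO 8601 timestamps with identical formatting sort correctly as strings.
    """
    root = ET.fromstring(xml_text)
    events = [
        {
            "title": ev.findtext("title", default=""),
            "start": ev.findtext("start", default=""),
            "room": ev.findtext("room", default=""),
        }
        for ev in root.iter("event")
    ]
    return sorted(events, key=lambda ev: ev["start"])
```

The refresh itself is plain cron: a crontab entry starting with `*/5 * * * *` runs the fetch command every 5 minutes.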

The project can probably be used without much modification for other conferences as well. I was told that there is also an Android app that can read the XML format, but I wanted a web app, so here you are.

Sources are on GitHub ( https://github.com/orangecms/conf-schedule ) and PRs and feedback are always welcome. Enjoy! :)

This year's DebConf is taking place in Heidelberg, Germany, so I have taken the chance to attend and get to know some Debian folks. Apparently they are very open and welcoming people, and to push that even further, there has been a session for newcomers to meet and get to know each other as well as to talk to some recurring visitors. This year they set a new attendance record (~600), and some famous speakers have been invited. Among them is Jacob Appelbaum, whom I shall invite for a bottle of Club-Mate, should I get to talk to him.

I'm looking forward to enjoying the rest of the conference and shall write about it every now and then, so stay tuned!

Do you also love cocktails as much as I do? I am always extending my equipment to make them.

Here we have this delicious delight:

Clover Club (main picture)

Gin Rickey (lower right)

Cheers!

Mix eggs with pieces of garlic and chili in a cup. Season with salt and pepper to taste. Put moderately thick slices of eggplant and/or zucchini in a pan. Start heating the pan and add a spoonful of the mixture on top of each slice. Fry on both sides.

Tada, you'll have hottie-veggie-tasties! :)

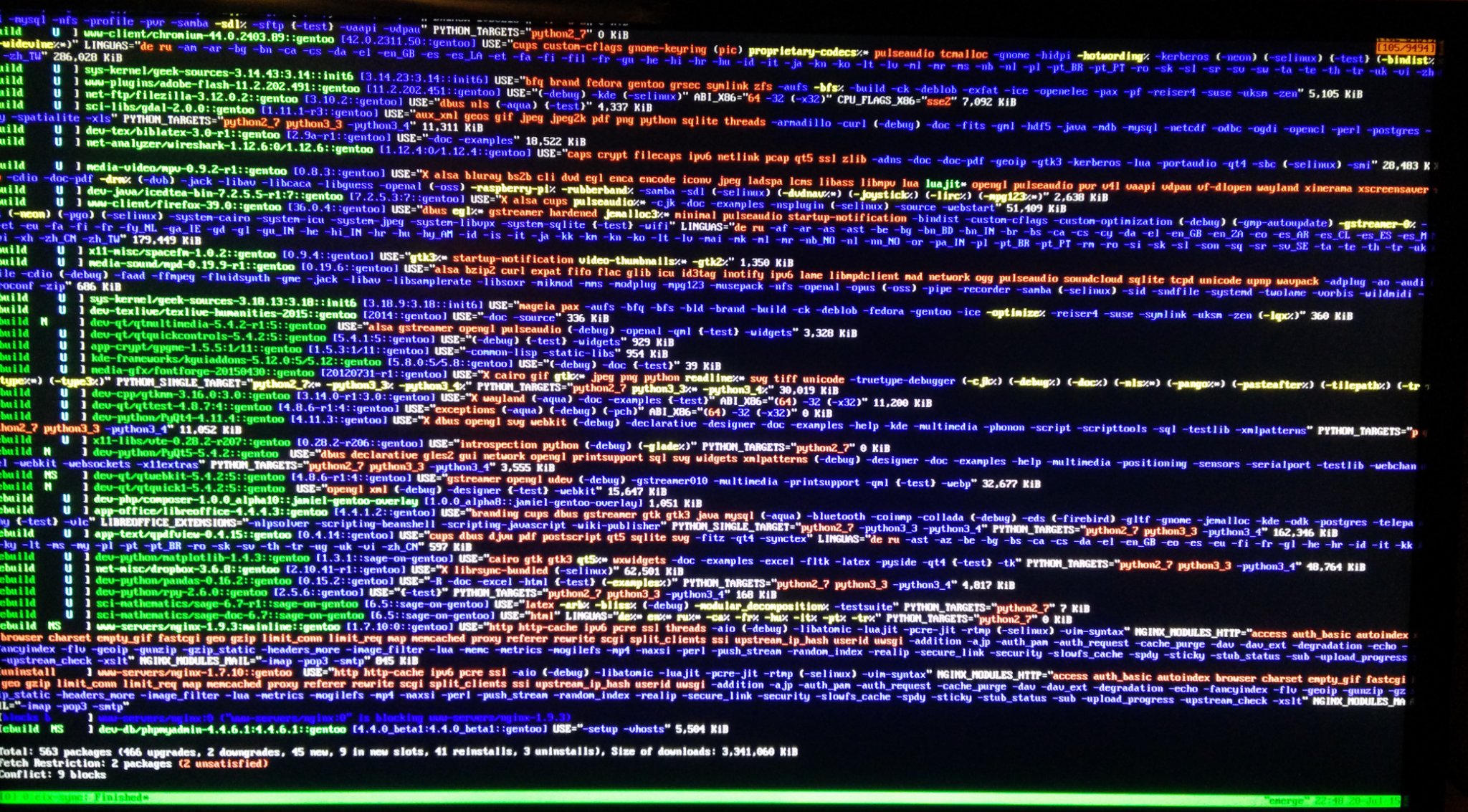

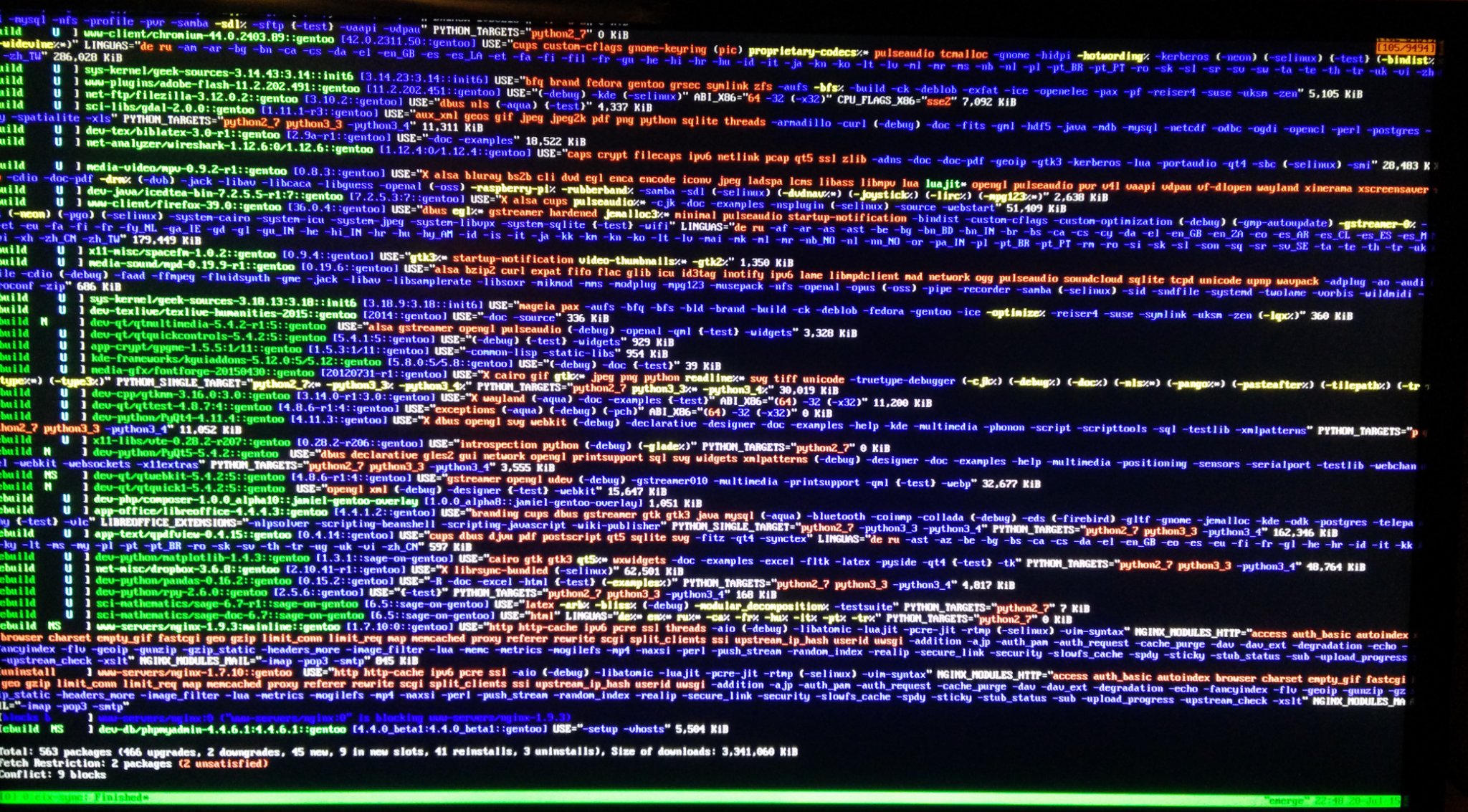

... and here is a photo of what it looks like when upgrading Gentoo. Colourful, powerful, flawless.

People who know me might think I'm a total nerd, hacking around and stuff. After all, I'm just simplifying things. And that is the reason why I love Gentoo Linux.

I have told this story many times but never published it like this:

I got started with Linux back in 11th grade, I think. A schoolmate told me to try it out, and that was when Fedora Core 1 had just been released in a beta-ish state. It was pretty horrible, because I was presented with a GUI without proper resolution or hardware acceleration. I played around with it and couldn't get it to work nicely, so I tossed it away after a while.

Years later, I got myself a NAS (Network Attached Storage), a Buffalo LinkStation. I could gain shell access and do some stuff with it, but mostly I didn't really know what I was doing. When the NAS died (stupid thing; the cause might have been the improper firmware I was using or other random circumstances), it was another couple of years until I heard about Linux again.

This time it was a fellow student at university who is now a good friend of mine. He recommended that I go with Ubuntu first and see how it works. And to be honest, that was a big, big disaster once again. I was facing a more complicated GUI than I had ever seen (named Unity), and after trying to install the software I actually wanted (namely Sage, for some courses where we used it to break crypto), I was unsatisfied again. It took me only a short time to break the system by mixing a mere dozen repos. In summary: I hate distros that are based on hundreds of binary repos and don't officially feature what you actually need. That is true for just about every rpm- or deb-based distro out there.

My friend then said that Gentoo Linux ( https://gentoo.org ) would be real hardcore, tough work and maybe hard to get through, but highly customizable. Hell, bollocks. It's the simplest, most convenient, most stable system I've ever seen in my entire life! I just write some stupid, simple text files (KISS), and the system builds an entire world of software packages for me (that's the terminology). And it has never, ever failed or broken anything. Isn't that amazing? Portage, the ports/package manager behind the scenes, is so awesome at resolving dependencies, and the Gentoo folks write insanely great ebuilds (package descriptions), so I can just lie back and have my machine build more than 500 packages for me without trouble, just like today. I'm now upgrading my system after a break of about a month. It works like a charm!

On my laptops I am using Arch Linux nowadays because it has binary repos (very few but rich ones though!) and is similarly convenient when building packages locally. I say: CoC to me means "Convenience over Complication"!

I've written a few package descriptions for both Arch and Gentoo. You can find them on GitHub ( https://github.com/cyrevolt ) or even in the AUR, the Arch User Repository.

Andy and I are watching Google I/O 2015 right now.

They have significantly enhanced Android's UX, including text input, text selection, and even voice input in certain contexts. Plus, they simplified voice control again and finally provide app permission control by default, as you know it from CyanogenMod. And they are promising longer battery life with a feature they call "Doze", which defers background activity while the device sits unused. Hopefully that will all work as expected.

On my way back home from oSC15, I met a nice German girl on the train and couldn't resist chatting with her. When she asked what I had actually been doing in the Netherlands, I told her that I had attended a conference about Linux and stuff. She said she had been running Ubuntu on her laptop for quite a few years, and that many of her friends did so as well. "Everyone knows Ubuntu by now, right?", she replied when I asked how she had gotten in touch with it. But she had never heard of openSUSE until then.

I started asking myself whether the community was willing to change that by opening up and telling the world that the project is still alive. I'm very confident that the new website will help spread the word, but there's way more to be done: wake up, get active, help improve the wiki, invite your friends and enemies, and get ready for the next release!

The network has been taken down. A marvelous oSC15 / Kolab Summit double-event is over now, and I say thanks to everyone who participated, especially the organizers and even more the volunteers who managed to get everything going. I hope to see many of you again soon, maybe this year at DebConf or next year for another round of openSUSE Conference, oSC16 at home in Nuremberg.

I am glad to say that I have learned so much about message brokers, packaging automation, and configuration management. But having met so many awesome people was surely the best part of it. Keep up your great work and continue being this wonderful and welcoming community. Tot ziens!

This morning, we openly discussed the current state of and plans for openSUSE. Without many people knowing it, openSUSE is doing an amazing job of packaging very recent, high-quality software at light speed, thanks to openQA and OBS, the Open Build Service. Both are great tools, and both allow us to move forward and save a huge amount of time. They are like emerald and diamond castles.

Now that openSUSE has opened the doors to these castles, the paths that lead there need to be prepared, and that is becoming very urgent right now. The wiki needs updates and maintenance, and the board members understand that. The website is about to be relaunched, and it needs content, which unfortunately isn't all there yet. They are aware of that as well. And they say: We have to work on that. And we need people!

Establishing a platform for (new) contributors requires hard and tedious work, which you can actually help with.

If you love openSUSE, have just tried it, or would like to take a look at it, please go ahead: contribute, give feedback, download and boot the ISO images! And remember: Have a lot of fun!

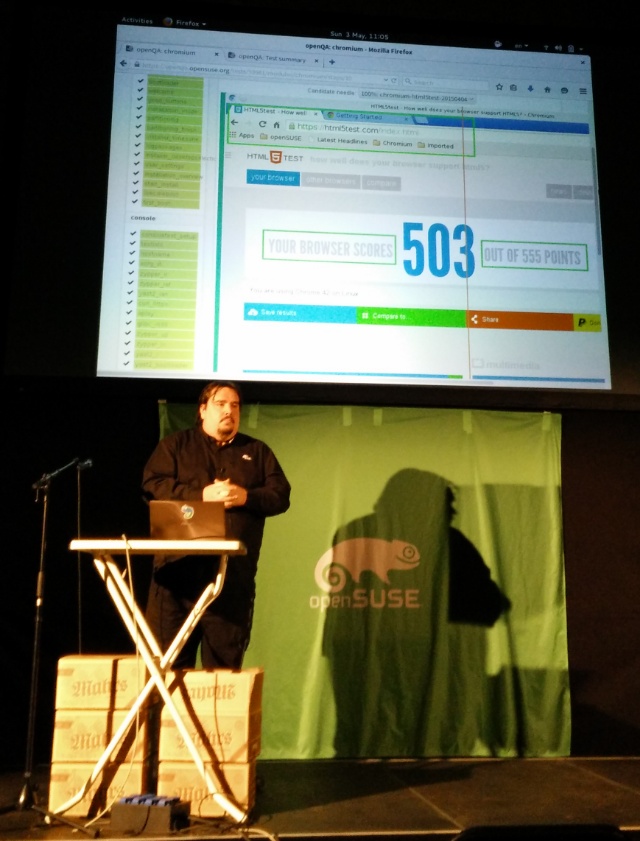

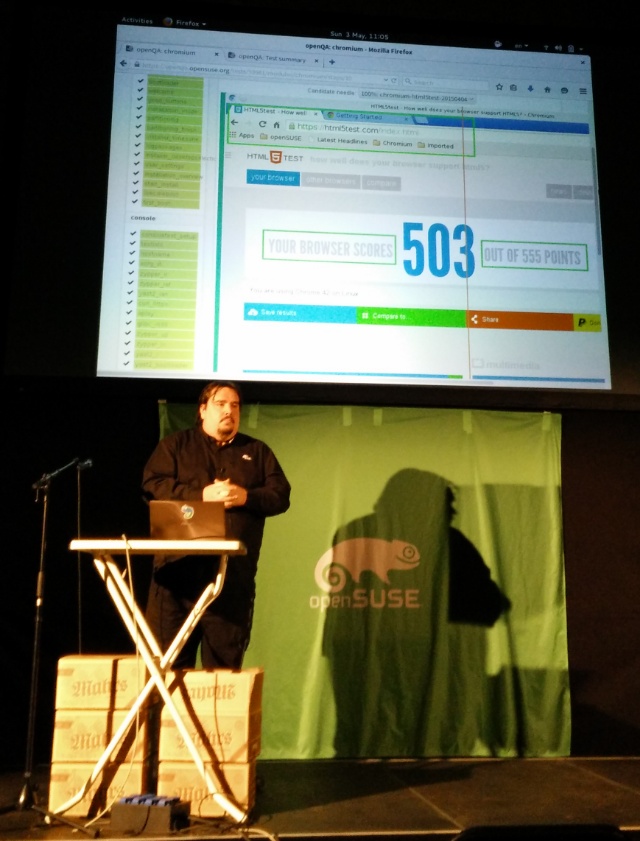

To me as a web developer, this screenshot is just too funny. For those not in the know: 503 is the HTTP status code for "Service Unavailable", meaning the server is temporarily unable to handle the request.

Anyway, Richard Brown just presented how openQA can be used to test apps, installers, console output and whatnot. It performs automated key presses and mouse clicks, takes screenshots, compares parts of them against reference images (defined as "needles"), and presents the results in a nice web frontend. All the capturing happens in a virtual machine, so you can easily test different architectures and monitor the whole boot process. As a bonus, you can watch the test while it runs and even get a video recording of the whole suite at the end.
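The needle idea can be illustrated with a toy in plain Python: a needle pairs an area of the screen with reference pixels, and a screenshot matches when that area is similar enough. openQA's actual matching is more elaborate; the fixed position, the mean-difference score, and the 0.96 threshold here are all assumptions of this sketch.

```python
from dataclasses import dataclass

# A grayscale image as rows of pixel values from 0 (black) to 255 (white).
Image = list[list[int]]


@dataclass
class Needle:
    """Toy needle: a reference patch expected at position (x, y)."""
    x: int
    y: int
    reference: Image
    threshold: float = 0.96  # assumed value for this sketch


def crop(img: Image, x: int, y: int, w: int, h: int) -> Image:
    return [row[x:x + w] for row in img[y:y + h]]


def similarity(a: Image, b: Image) -> float:
    """1.0 for identical patches, 0.0 for maximally different ones."""
    diffs = [abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb)]
    return 1.0 - sum(diffs) / (255 * len(diffs))


def matches(screenshot: Image, needle: Needle) -> bool:
    h, w = len(needle.reference), len(needle.reference[0])
    region = crop(screenshot, needle.x, needle.y, w, h)
    if len(region) != h or any(len(row) != w for row in region):
        return False  # the area sticks out of the screenshot
    return similarity(region, needle.reference) >= needle.threshold
```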

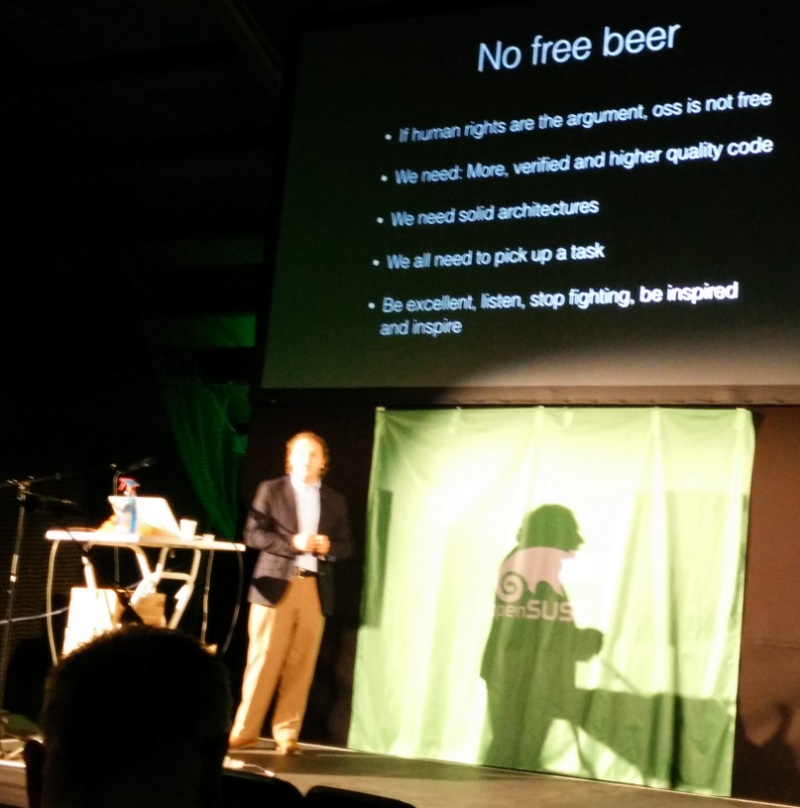

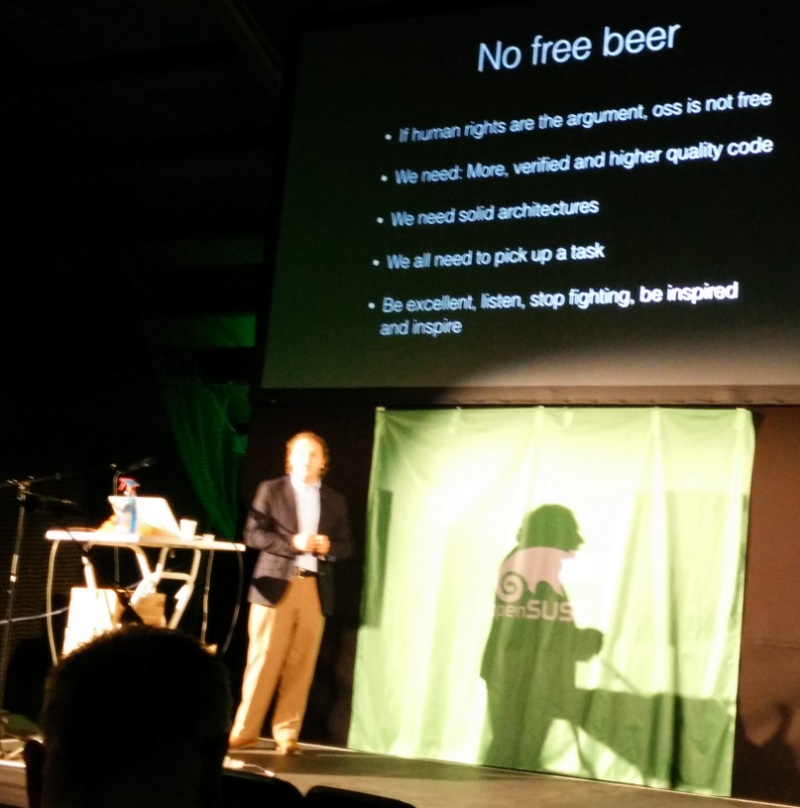

says Brenno de Winter, and I totally agree. Too much software is poor, not because it is built on top of open source software, but rather because it is not verified. If you use free software, you are obliged to check that it works properly and securely, just like you do with proprietary software. That is exactly my standpoint and the reason why I want to see more testing and CI/CD processes implemented. I am a huge fan of TDD for that reason, and of BDD in addition. But that isn't everything. You must never forget pentesting, i.e., checking weird cases but also, and especially, very common attacks and techniques. Thankfully, OWASP (http://owasp.org) regularly publishes a Top 10 list of common issues, and many security people blog about their expertise, like Gareth Heyes (http://thespanner.co.uk), for example. Many put their conference talks online in the form of recordings, slides, demos and so on. So please, open up your minds and wallets a bit to provide more secure software. You *can* do that for sure, no excuses!
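In that test-first spirit, here is a minimal sketch of checking one very common attack, cross-site scripting, with Python's standard `unittest` and `html.escape`. `render_comment` is a hypothetical helper made up for this example; a real web application would usually rely on its template engine's auto-escaping instead.

```python
import html
import unittest


def render_comment(text: str) -> str:
    """Hypothetical view helper that embeds user input in HTML."""
    return f"<p>{html.escape(text)}</p>"


class RenderCommentTest(unittest.TestCase):
    def test_plain_text_is_unchanged(self):
        self.assertEqual(render_comment("hello"), "<p>hello</p>")

    def test_script_tag_is_neutralised(self):
        out = render_comment("<script>alert(1)</script>")
        self.assertNotIn("<script>", out)
        self.assertEqual(out, "<p>&lt;script&gt;alert(1)&lt;/script&gt;</p>")

    def test_quotes_are_escaped(self):
        # Matters as soon as the same helper is reused inside an attribute.
        self.assertNotIn('"', render_comment('" onmouseover="alert(1)'))
```

Saved as a module, the tests run with `python -m unittest`; writing them before the helper is the TDD way round.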

The sun has risen, the birds are singing, and a bunch of geeks have gathered in a sports center for the second day of oSC15. Today is also the first day of Kolab Summit, and both events are held at the same venue, allowing for more exchange and enabling people to join each other's sessions. Kolab Summit is mainly about healthcare, security, and groupware. I'm partially a security guy, so I might attend some of their talks as well. Oh, and we are sharing a common Wi-Fi! :)

... somewhere in Den Haag, The Netherlands, Ramon de la Fuente is speaking about Ansible, a configuration management, deployment and provisioning tool that I am starting to like. I first got in touch with it when working on PHP projects like Shopware. Shopware provides a Vagrantfile (Vagrant is a virtualization wrapper, sort of) for developers that provisions through Ansible. As my projects grow in size and number, I want to be able to set up a new system easily. That makes Ansible my tool of choice for now.

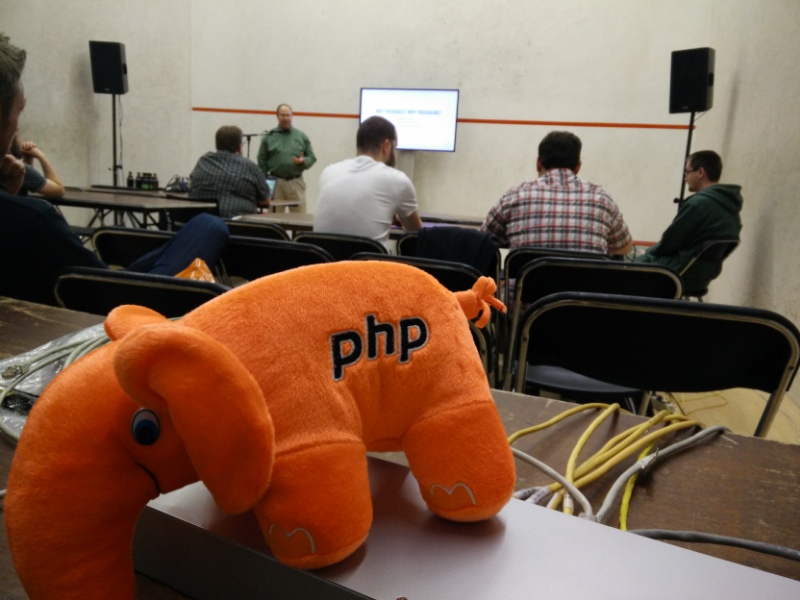

In the world of Linux, *BSD and whatnot, packages are a core feature to deliver software to end users. Craig Gardner, Tomáš Chvátal and Lars Vogdt are explaining how it works while the elePHPant is sniffing on the network.

We just quickly set up the workshop room and the final schedule is now available at https://events.opensuse.org/. If you aren't here but want to follow the talks, you can watch them through the livestream at http://bambuser.com/channel/opensusetv.

Good morning everyone, and let the show begin.

Hello everypony,

this year I am attending the openSUSE Conference again and this time I will be blogging live from there. :)

If you want to follow me, feel free to add the RSS feed or just come here every once in a while. Yay! =)
